Transformer

Table of contents

From AutoEncoder to Transformer (?)

How is the Transformer evolving today?

[2024 GPT on a Quantum Computer] Integrates quantum computing with the Transformer architecture, the LLM architecture behind ChatGPT, as a form of Quantum Machine Learning.

[2024 Evolution Transformer: In-Context Evolutionary Optimization] Introduces the Evolution Transformer, which is trained with a supervised optimization technique and outputs performance-improving updates to the search distribution, with invariance properties built into the update.

[2024 PerOS: Personalized Self-Adapting Operating Systems in the Cloud] Proposes PerOS, a personalized OS with LLM capabilities built in.

‘Connection’

![Schema of a basic autoencoder. [Reference from Wiki.]](Transformer%20fc5244b57a6d46d7a6cd5ed1d1b90104/Untitled.png)

The Transformer was introduced for NLP tasks, while the AutoEncoder is most often associated with image processing; neither is strictly limited to that domain.
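To make the autoencoder schema in the figure concrete, here is a minimal sketch of the input → code → reconstruction flow. It assumes a purely linear, untrained model with random weights; `TinyAutoencoder`, `matvec`, and `make_matrix` are hypothetical names chosen for illustration, not taken from any library or from the referenced material.

```python
import random


def matvec(weights, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def make_matrix(rows, cols, rng):
    """Hypothetical helper: small random weights (untrained)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyAutoencoder:
    """Illustrative linear autoencoder: input -> code -> reconstruction."""

    def __init__(self, input_dim, code_dim, seed=0):
        rng = random.Random(seed)
        # Encoder compresses input_dim values down to code_dim values.
        self.encoder_weights = make_matrix(code_dim, input_dim, rng)
        # Decoder expands the code back to input_dim values.
        self.decoder_weights = make_matrix(input_dim, code_dim, rng)

    def encode(self, x):
        return matvec(self.encoder_weights, x)

    def decode(self, code):
        return matvec(self.decoder_weights, code)

    def reconstruction_error(self, x):
        """Mean squared error between the input and its reconstruction."""
        x_hat = self.decode(self.encode(x))
        return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


model = TinyAutoencoder(input_dim=8, code_dim=3)
x = [0.1 * i for i in range(8)]
code = model.encode(x)      # bottleneck representation (3 values)
x_hat = model.decode(code)  # reconstruction (8 values)
```

Training would adjust both weight matrices to reduce `reconstruction_error`; that step is omitted here, so the reconstruction is not expected to be close to the input.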

Recent research on AutoEncoders: see arXiv.

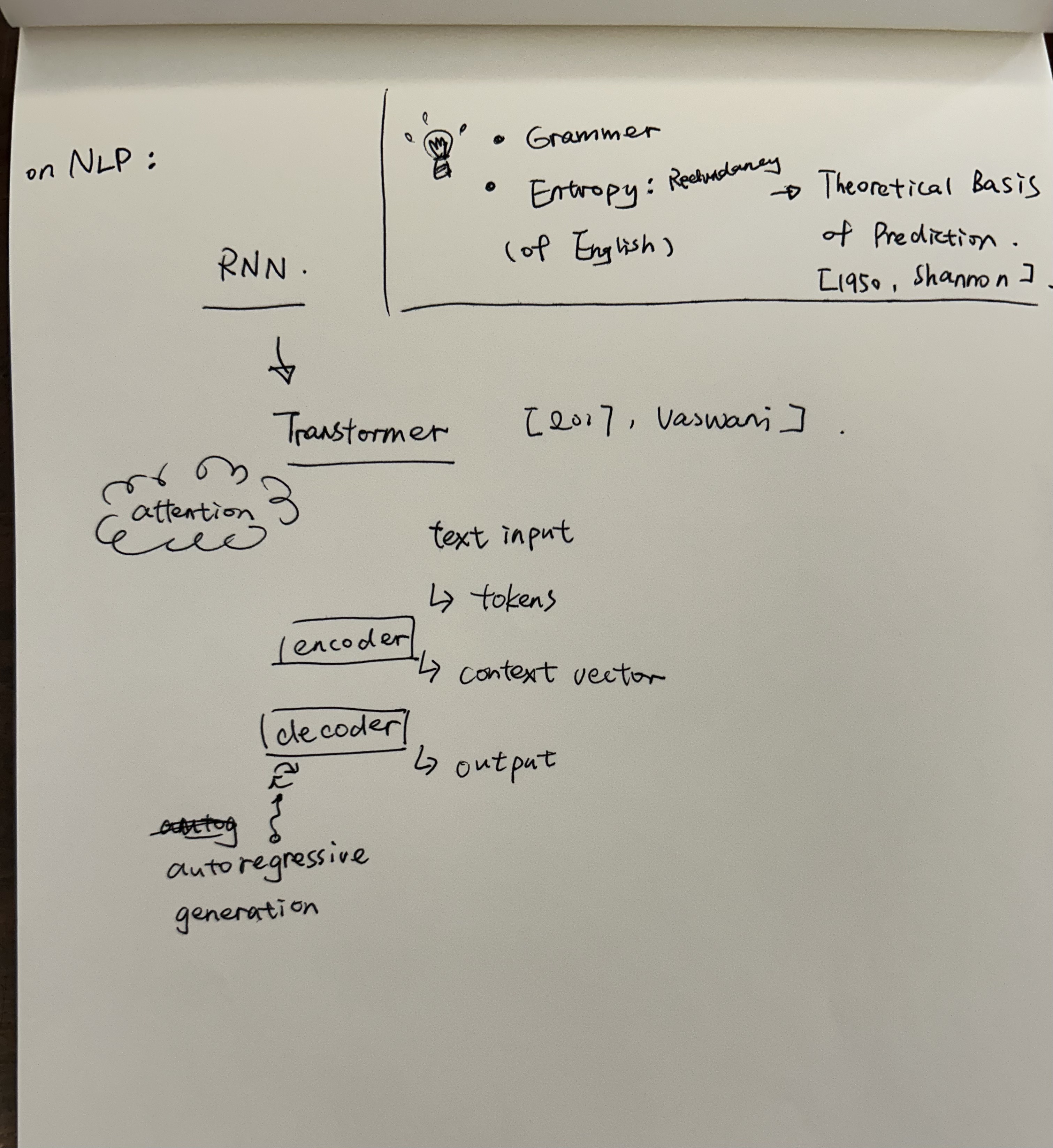

Quick review of Transformer

Reference: What are Large Language Models

[1951, Shannon] Shannon, C. E. (1951). Prediction and entropy of printed English. The Bell System Technical Journal, 30(1), 50–64. https://doi.org/10.1002/j.1538-7305.1951.tb01366.x

[2017, Vaswani] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems, 30.