On-device Machine Learning


Update 0618

On-device model deployment:

- For Android: ML Kit or TensorFlow Lite. See Use ML Kit on Android and Use TensorFlow Lite on Android.

- For iOS: Core ML. See Core ML Tools.

TensorFlow Lite

A library for running models on mobile devices. The workflow is: choose or train a model → convert it into a compressed FlatBuffer file (xxx.tflite) with the Converter → load the .tflite file onto the mobile device → optimize, e.g., by quantizing 32-bit floats into more efficient 8-bit integers (applied during conversion) or by running on the GPU. An Android guide is available here.
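The quantization step can be illustrated with a minimal sketch of affine (scale plus zero-point) int8 quantization in plain Python. This is not the TensorFlow Lite converter's implementation, which also handles per-channel scales, activations, and calibration data; the helper names `quantize_int8` and `dequantize` and the sample weights are hypothetical. It shows both sides of the trade-off: storage drops from 4 bytes to 1 byte per value, and the round trip introduces a small error.

```python
import array

def quantize_int8(weights):
    """Affine int8 quantization: w ≈ scale * (q - zero_point)."""
    qmin, qmax = -128, 127
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    # Round each weight onto the int8 grid and clamp to the valid range.
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [scale * (v - zero_point) for v in q]

# Hypothetical layer weights, for illustration only.
weights = [-0.42, 0.0, 0.13, 0.97, -1.5, 0.5]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
float32_bytes = len(array.array("f", weights).tobytes())  # 4 bytes per value
int8_bytes = len(array.array("b", q).tobytes())           # 1 byte per value
print(float32_bytes, int8_bytes)  # 24 6
print(f"max round-trip error: {max_error:.4f}")
```

The error is bounded by roughly one quantization step (`scale`), which is why wide weight ranges lose more precision than narrow ones.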

Optimize models

The goals of model optimization are to a) reduce the model size, b) reduce latency, and c) make the model compatible with hardware accelerators.

These optimizations usually come with a trade-off in accuracy.

Here are some types of optimization available for TensorFlow Lite:

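- Quantization: storing weights (and optionally activations) at lower precision, e.g., 8-bit integers instead of 32-bit floats.
- Pruning: zeroing out low-magnitude weights so the model compresses better.
- Clustering: replacing the weights with a small number of shared values (centroids).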
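Pruning and clustering can be sketched the same way. The plain-Python functions below are hypothetical illustrations of the ideas, not the TensorFlow Model Optimization Toolkit, which applies them gradually during training. Magnitude pruning zeroes out the smallest weights, and clustering runs a 1-D k-means (with linear initialization, an assumption of this sketch) so that each weight becomes one of k shared centroid values.

```python
def prune_by_magnitude(weights, sparsity):
    """Set the `sparsity` fraction of weights with the smallest |w| to zero."""
    n_prune = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:n_prune]:
        pruned[i] = 0.0
    return pruned

def nearest(w, centroids):
    """Index of the centroid closest to weight w."""
    return min(range(len(centroids)), key=lambda j: abs(w - centroids[j]))

def cluster_weights(weights, k, iterations=20):
    """1-D k-means: return k shared centroids and each weight's centroid index."""
    lo, hi = min(weights), max(weights)
    # Linear initialization: spread the centroids evenly over the weight range.
    centroids = [lo + (hi - lo) * j / max(k - 1, 1) for j in range(k)]
    for _ in range(iterations):
        buckets = [[] for _ in range(k)]
        for w in weights:
            buckets[nearest(w, centroids)].append(w)
        # Move each centroid to the mean of its weights; keep it if the bucket is empty.
        centroids = [sum(b) / len(b) if b else c for b, c in zip(buckets, centroids)]
    return centroids, [nearest(w, centroids) for w in weights]

# Hypothetical weights, for illustration only.
weights = [0.9, -0.05, 0.31, -0.88, 0.02, 0.29, -0.9, 0.04]

pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.31, -0.88, 0.0, 0.0, -0.9, 0.0]

centroids, assignments = cluster_weights(weights, k=3)
clustered = [centroids[i] for i in assignments]
print(assignments)  # [2, 1, 1, 0, 1, 1, 0, 1]
```

With k = 3, each of the eight weights can be stored as a 2-bit index into a three-entry table of floats, which is where the size saving of clustering comes from.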

See more in the reference: Model optimization.

Another option for a faster model is to customize and optimize the operators themselves. See more here.

Google MediaPipe

Offers both ready-to-use and customizable on-device machine learning pipelines. The models are task-oriented, and the APIs are low-code.

Take pose landmark detection as an example. MediaPipe ships its models (actually TensorFlow Lite models) bundled in xxx.task files (downloaded into assets/). On Android, the PoseLandmarker class (package com.google.mediapipe.tasks.vision.poselandmarker) provides methods to detect pose landmarks. The calls are neat: most of the app code focuses on building the application rather than on machine learning. See more here. 🍇 GitHub demo is here.

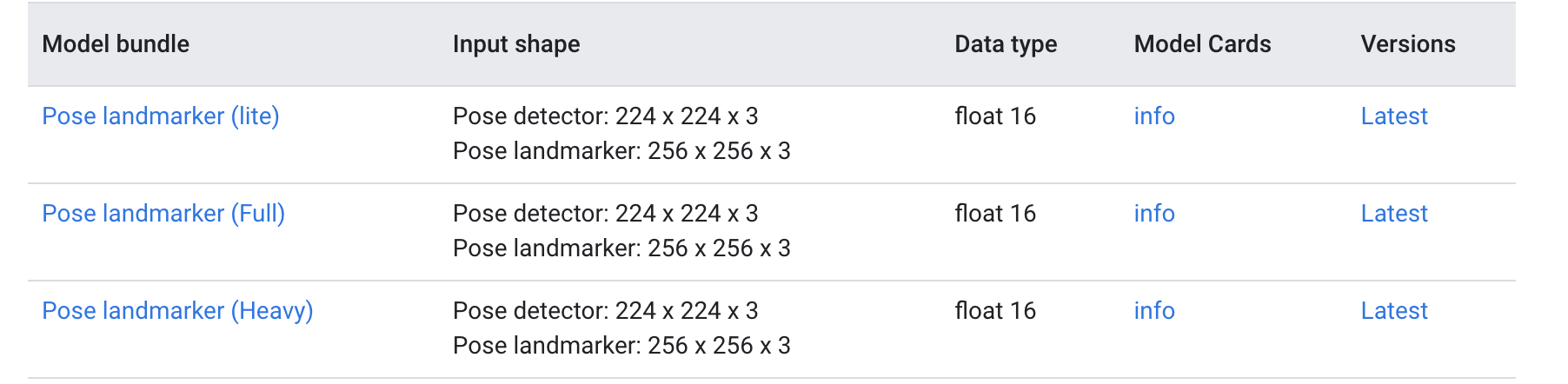

Three models are available for pose landmark detection: _lite, _full, and _heavy. The lite model is the fastest, and the heavy model is the most accurate.

Reference: Pose landmark detection Overview.

The PoseLandmarker accepts still images, decoded video frames, and live video feeds as input (the IMAGE, VIDEO, and LIVE_STREAM running modes).

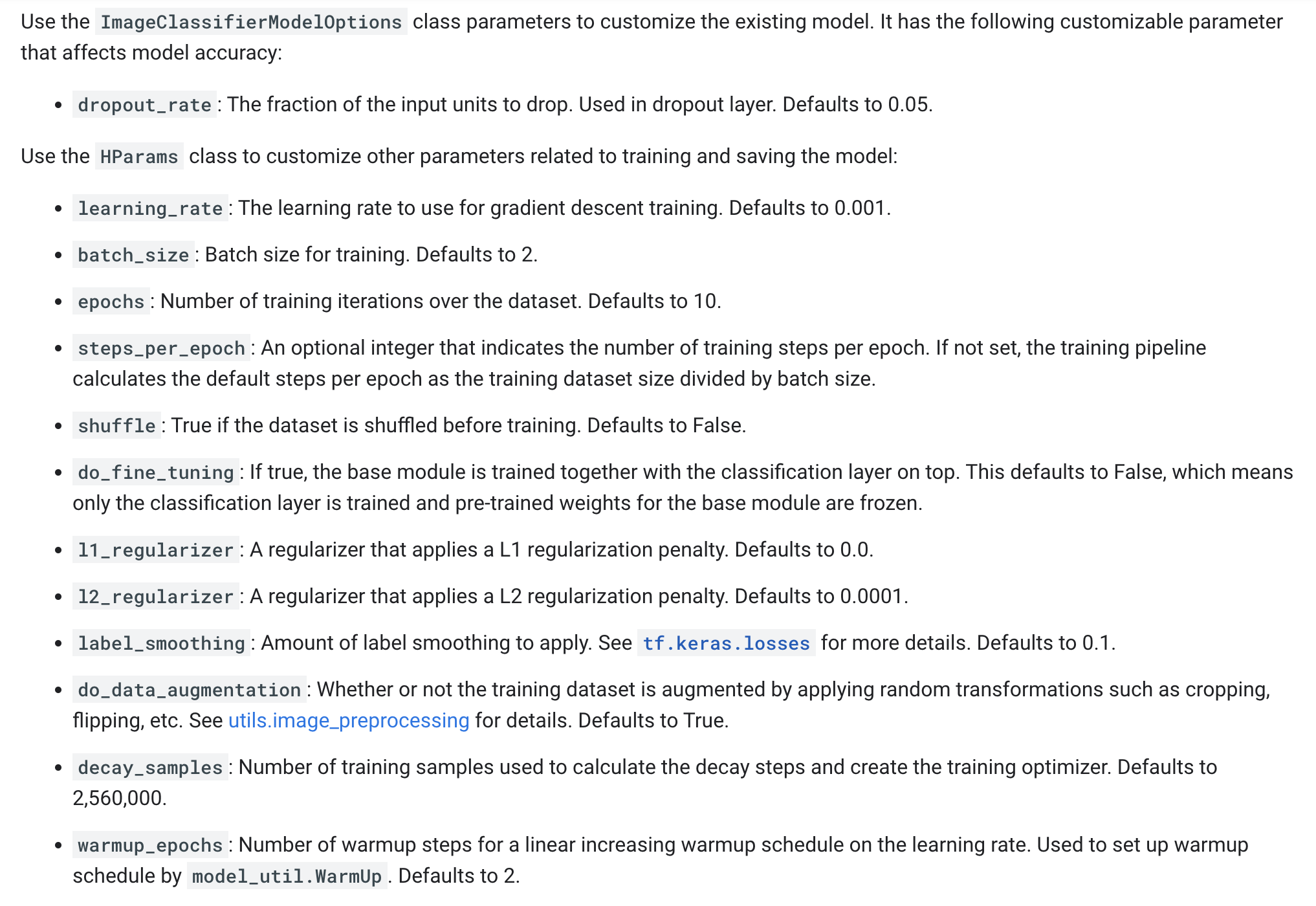

Model customization is possible for some tasks, such as image classification. See the guide here.

Retraining a model using the Model Maker tool

See more about MediaPipe Model Maker here.

Hyperparameters such as the learning rate, batch size, and number of epochs can be tuned during retraining.

Post-training quantization can help reduce the model size and can be configured when exporting the model. See more in this TensorFlow Lite guide.
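A back-of-the-envelope view of what each post-training quantization option saves: the sketch below counts only weight storage (bytes per parameter) and ignores metadata and graph structure, so real .tflite files will differ somewhat. The 3.5M-parameter count and the helper name `estimate_weight_mb` are hypothetical; the byte widths follow the TensorFlow Lite options (float16 weights, or int8 weights for dynamic-range and full-integer quantization).

```python
# Bytes used to store one weight under each option (weights only).
BYTES_PER_WEIGHT = {
    "float32 (no quantization)": 4,
    "float16 quantization": 2,
    "dynamic range quantization": 1,  # int8 weights only
    "full integer quantization": 1,   # int8 weights and activations
}

def estimate_weight_mb(num_params, bytes_per_weight):
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bytes_per_weight / 1_000_000

num_params = 3_500_000  # hypothetical model size
for option, width in BYTES_PER_WEIGHT.items():
    print(f"{option}: {estimate_weight_mb(num_params, width):.1f} MB")
# float32 (no quantization): 14.0 MB
# float16 quantization: 7.0 MB
# dynamic range quantization: 3.5 MB
# full integer quantization: 3.5 MB
```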

Google ML Kit (Older)

Offers ready-to-use, task-oriented on-device machine learning models and APIs.

Take pose landmark detection as an example again. ML Kit uses TensorFlow Lite models directly (xxx.tflite, downloaded into assets/). On Android, the PoseDetector class (package com.google.mlkit.vision.pose) plays a role similar to MediaPipe's PoseLandmarker. See more here. 🍇 GitHub demo is here.

💡 The pose landmark models offered by MediaPipe and ML Kit are the same: both track 33 body landmark locations. [They are trained in the same way.](https://blog.research.google/2020/08/on-device-real-time-body-pose-tracking.html) *BlazePose* is now part of MediaPipe.
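Because both libraries return the same 33-landmark topology, application logic written against it carries over between them. As a hedged illustration, the sketch below computes the left-elbow angle from three landmarks. It assumes the BlazePose indexing (11 = left shoulder, 13 = left elbow, 15 = left wrist) and that each landmark has already been reduced to an (x, y) tuple; the `joint_angle` helper and the sample coordinates are hypothetical, not part of either API.

```python
import math

# BlazePose landmark indices (subset of the 33-point topology).
LEFT_SHOULDER, LEFT_ELBOW, LEFT_WRIST = 11, 13, 15

def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("landmarks coincide; angle is undefined")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

# Hypothetical pose: 33 (x, y) points, with the left arm bent at a right angle.
landmarks = [(0.0, 0.0)] * 33
landmarks[LEFT_SHOULDER] = (0.5, 0.3)
landmarks[LEFT_ELBOW] = (0.5, 0.5)
landmarks[LEFT_WRIST] = (0.7, 0.5)

angle = joint_angle(landmarks[LEFT_SHOULDER], landmarks[LEFT_ELBOW], landmarks[LEFT_WRIST])
print(round(angle, 1))  # 90.0
```

If the landmarks are normalized to [0, 1] per axis, as MediaPipe's normalized landmarks are, multiply x by the image width and y by the image height first; otherwise the angle is distorted on non-square images.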

In the ML Kit demo project, only one model (model.tflite) is pre-downloaded under assets/.